For the last few days I’ve been playing with VS2010 beta 2 and ASP.NET MVC2. I hadn’t done much with the first version of ASP.NET MVC, but I hope to get some more time to play with version 2.

After seeing in Scott Hanselman’s PDC video on ASP.NET MVC2 how easy validating input data can be, I wanted to try it out for myself. I had already started working on an MVC2 project that used manual validation, so I added the data annotation attributes to the class and... it didn’t work. It turns out that the component that transforms the HTTP form data into a .NET object, the Model Binder, needs to support the attributes, and the default binder is very simple and does not. If you talk to a database through the Dynamic Data Framework, a model binder that does support them is used somewhere along the line, and apparently Scott used Dynamic Data in his demo.
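To illustrate why the attributes do nothing by themselves, here is a minimal sketch outside of MVC. The `Person` class, its rules, and the `AnnotationDemo` helper are made up for this example; only the `System.ComponentModel.DataAnnotations` types are real. The attributes are plain metadata, and nothing is checked until some code (here the .NET 4 `Validator` class, in MVC the model binder) actually runs them.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;

// Hypothetical model; the property and its rules are illustrative only.
public class Person
{
    [Required(ErrorMessage = "Last name is required.")]
    [StringLength(50, ErrorMessage = "Last name may be at most 50 characters.")]
    public string LastName { get; set; }
}

public static class AnnotationDemo
{
    // Runs every annotated rule on the object and returns the failures.
    public static List<ValidationResult> Validate(object instance)
    {
        var context = new ValidationContext(instance, null, null);
        var results = new List<ValidationResult>();
        // The last argument asks for all property attributes, not only [Required].
        Validator.TryValidateObject(instance, context, results, true);
        return results;
    }

    public static void Main()
    {
        // LastName was never set, so the [Required] rule fails.
        foreach (ValidationResult failure in Validate(new Person()))
        {
            Console.WriteLine(failure.ErrorMessage);
        }
    }
}
```

Creating a `Person` without calling `Validate` never triggers the rules, which is essentially the situation I was in with a binder that ignores the attributes.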

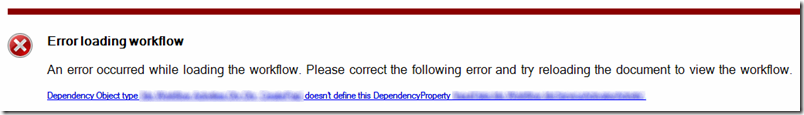

The project I had started was using the ADO.NET Entity Framework, which does not support data annotations (yet), and I didn’t feel like switching to the Dynamic Data Framework just for the validation. But it turns out you can easily(?) write your own binder, or rather extend the default binder, and make it support the data annotation attributes. I found this in a blog post by Brad Wilson about using data annotations in ASP.NET MVC, which also pointed me to the sample project he discusses. I downloaded it and tried to build it as a .NET 4.0 project, but since the sample was written for MVC1 and .NET 3.5 SP1, I first got compiler errors about a missing function, and once I got it to compile I got a nasty runtime error:

This property setter is obsolete, because its value is derived from ModelMetadata.Model now.

The compiler errors about the missing function took some reasoning to resolve. You need to change the following code, which appears in two places and is slightly different in the second place:

    if (!attribute.TryValidate(bindingContext.Model, validationContext, out validationResult))
    {
        bindingContext.ModelState.AddModelError(bindingContext.ModelName, validationResult.ErrorMessage);
    }

Into this:

    validationResult = attribute.GetValidationResult(bindingContext.Model, validationContext);
    if (validationResult != ValidationResult.Success)
    {
        bindingContext.ModelState.AddModelError(bindingContext.ModelName, validationResult.ErrorMessage);
    }
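The same pattern can be tried outside the binder. The sketch below is my own illustration, not code from the sample project: the `GetValidationResultDemo` class and its `Check` helper are made-up names. It shows that in .NET 4 `GetValidationResult` returns `ValidationResult.Success` when the value passes and a result carrying the error message when it does not.

```csharp
using System;
using System.ComponentModel.DataAnnotations;

public static class GetValidationResultDemo
{
    // Returns the error message, or null when the value passes the rule.
    public static string Check(ValidationAttribute attribute, object value, string memberName)
    {
        // The context needs a non-null instance; in the binder this is the model.
        // A placeholder object is used here because only the value matters.
        var context = new ValidationContext(new object(), null, null) { MemberName = memberName };

        ValidationResult result = attribute.GetValidationResult(value, context);
        return result == ValidationResult.Success ? null : result.ErrorMessage;
    }

    public static void Main()
    {
        var rule = new StringLengthAttribute(5) { ErrorMessage = "Too long." };

        Console.WriteLine(Check(rule, "Smith", "LastName") ?? "valid");     // prints "valid"
        Console.WriteLine(Check(rule, "Hanselman", "LastName") ?? "valid"); // prints "Too long."
    }
}
```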

After some searching for the runtime error I found an answer on StackOverflow. The code discussed there is a little different from the code in the binder sample I am using, but it was enough for me to get it to work. Basically you need to change this:

    var innerContext = new ModelBindingContext()
    {
        Model = propertyDescriptor.GetValue(bindingContext.Model),
        ModelName = fullPropertyKey,
        ModelState = bindingContext.ModelState,
        ModelType = propertyDescriptor.PropertyType,
        ValueProvider = bindingContext.ValueProvider
    };

Into this:

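    var innerContext = new ModelBindingContext()
    {
        // Model and ModelType are now derived from ModelMetadata. The accessor returns
        // the current property value, matching what the old Model assignment did.
        ModelMetadata = ModelMetadataProviders.Current.GetMetadataForType(
            () => propertyDescriptor.GetValue(bindingContext.Model),
            propertyDescriptor.PropertyType),
        ModelName = fullPropertyKey,
        ModelState = bindingContext.ModelState,
        ValueProvider = bindingContext.ValueProvider
    };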

And it will work.

Next was finding out how exactly to use it. In the blog post about the sample binder, the controller action receives the entire model as a parameter, but my code only receives an identifier and I need to get the rest of the data out of the database. So just checking “ModelState.IsValid” wasn’t enough in my case. I also needed to call “UpdateModel” to have the binder transform the input data into the .NET object that I use in my code. The problem I ran into there was that updating the model also runs the validation rules, and an exception is thrown when the validation fails. This code gives me the exception:

    [AcceptVerbs(HttpVerbs.Post)]
    public ActionResult Edit(string lastName, FormCollection collection)
    {
        Person person = new Person();
        UpdateModel(person);
        PersonController.personStore[lastName] = person;
        return RedirectToAction("Details", person);
    }

It turns out I should not be using UpdateModel, but rather TryUpdateModel. That lets the model be updated and the validation fail without resorting to exception handling: it returns false instead of throwing. Like so:

    [AcceptVerbs(HttpVerbs.Post)]
    public ActionResult Edit(string lastName, FormCollection collection)
    {
        Person person = new Person();
        bool isModelValid = TryUpdateModel(person);
        if (isModelValid)
        {
            PersonController.personStore[lastName] = person;
            return RedirectToAction("Details", person);
        }
        else
        {
            person.LastName = lastName;
            return View(person);
        }
    }

Doing it like that gives me the nice error message I was looking for: